Humans may be more likely to believe disinformation generated by AI

A new study suggests that disinformation generated by AI may be more convincing than disinformation written by humans. The research, led by Giovanni Spitale at the University of Zurich, found that people were 3% less likely to spot false tweets generated by AI than those written by humans. This credibility gap is concerning because the problem of AI-generated disinformation seems poised to grow significantly.

The study tested susceptibility to different types of text by asking OpenAI’s large language model GPT-3 to generate true and false tweets on common disinformation topics, including climate change and COVID. These were compared with a random sample of true and false tweets from Twitter. Participants were then asked to judge whether tweets were generated by AI or collected from Twitter, and whether they were accurate or contained disinformation.

The researchers are unsure why people may be more likely to believe tweets written by AI, but the way GPT-3 orders information may play a role. Spitale notes that GPT-3’s text tends to be more structured and condensed than human-written text, making it easier to process.

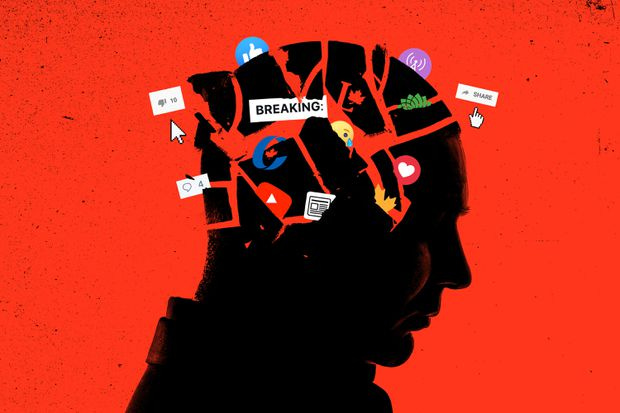

The generative AI boom puts powerful, accessible AI tools in the hands of everyone, including bad actors. Models like GPT-3 can produce incorrect text that appears convincing, which conspiracy theorists and disinformation campaigns could use to create false narratives quickly and cheaply. The weapons to fight the problem, AI text-detection tools, are still in the early stages of development, and many are not entirely accurate.

OpenAI is aware that its AI tools could be weaponized to produce large-scale disinformation campaigns. Although such use violates its policies, the company released a report warning that it’s “all but impossible to ensure that large language models are never used to generate disinformation.” Further research is needed to determine the populations at greatest risk from AI-generated inauthentic content, as well as the relationship between AI model size and the overall performance or persuasiveness of its output.

In conclusion, this study highlights the potential dangers of AI-generated disinformation and underscores the need for continued research and development of tools to combat this growing problem. As AI technology continues to advance at a rapid pace, it is important for society to remain vigilant and take proactive measures to prevent the spread of false information.